Feedback to the Proposed Admissions and Consumer Transparency Supplement (ACTS)

The U.S. Department of Education has proposed the Admissions and Consumer Transparency Supplement (ACTS) to be added to IPEDS beginning in the 2025–26 collection cycle. This new survey would require institutions to report detailed undergraduate and graduate admissions and enrollment metrics—many of which are not currently collected through IPEDS.

In response, AIR (in partnership with AACRAO, ACE, APLU, and NAICU) conducted a survey to gather feedback from higher education professionals, including those in institutional research and effectiveness, enrollment management, and registrar offices. The goal was to ensure that campus perspectives inform both public comments and advocacy efforts during the federal review period.

Institutional Admissions Policy

Respondents were asked to indicate their institution’s admissions selectivity.

- Open access: Respondents from predominantly public 2-year institutions (14% of total respondents)

- Selective: Most common among private nonprofit and public 4-year institutions (69% of total respondents)

- Highly selective: More common among private nonprofit 4-year institutions (17% of total respondents)

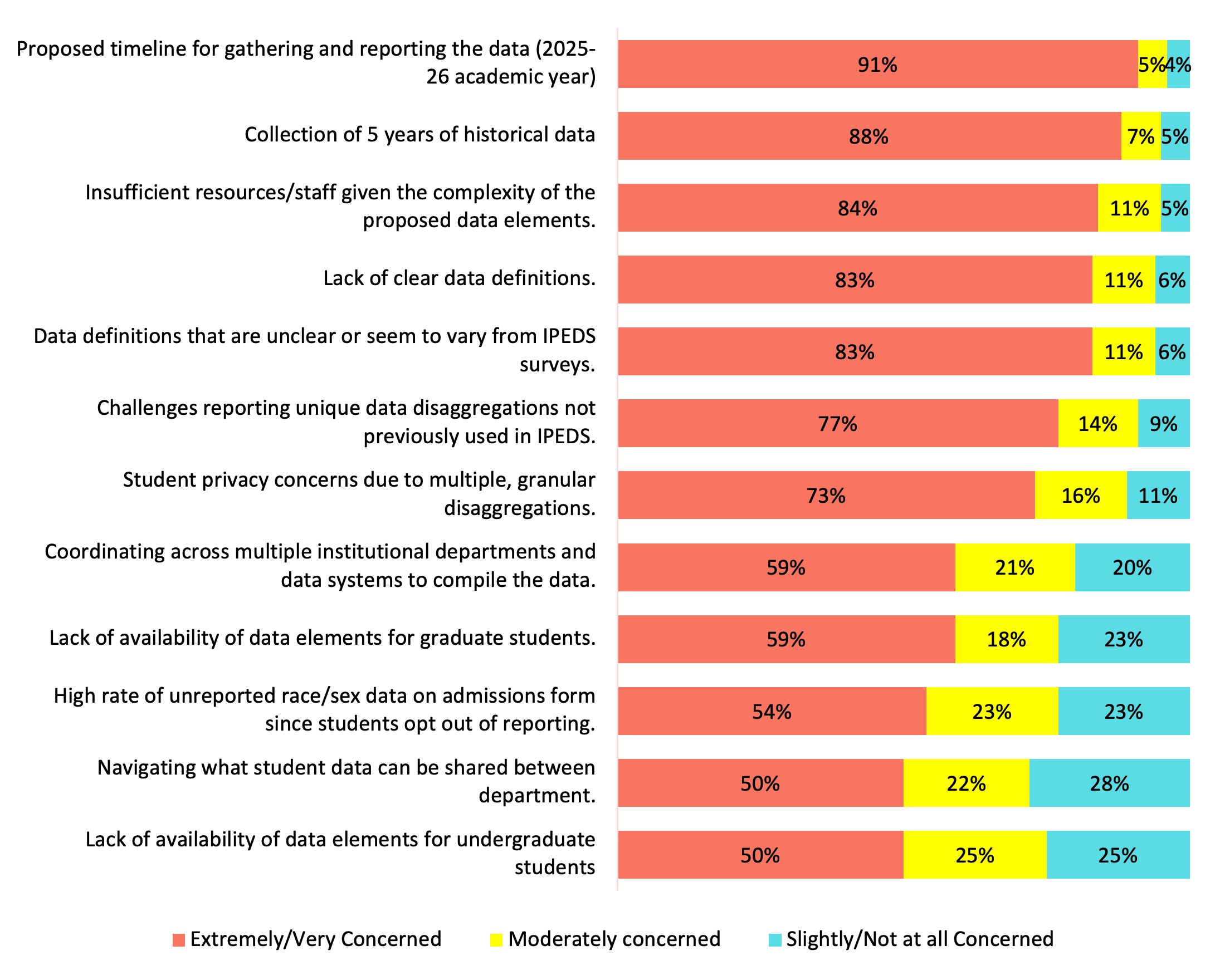

Degree of Concern with Potential Barriers

Respondents expressed high levels of concern with multiple aspects of the proposed ACTS data collection; see Chart 1. The top concerns were:

- Proposed timeline: 91% reported being extremely or very concerned

- Collection of historical data: 88% are extremely/very concerned

- Insufficient staff time or capacity: 84% are extremely/very concerned

A significant majority indicated they were extremely or very concerned about at least one issue, which demonstrates the perceived burden and uncertainty surrounding the rollout.

Chart 1. Degree of Concern with Potential Barriers

Respondents raised a range of data quality concerns in their open-ended comments. The themes below reflect the most common issues, illustrated with direct quotes.

Limited Availability and Accessibility of Required Data: Many respondents noted that the proposed data elements are not readily available, are spread across systems, or are difficult to extract.

- “Lack of availability of data elements and insufficient resources to gather them.”

- “Time, staffing, availability of data, coordination among departments.”

- “We don’t currently track some of these data points.”

Burden of Historical Data Retrieval: Concerns about the difficulty of finding or reconstructing five years of historical data for new variables.

- “Burden of gathering historical data that hasn’t been previously reported.”

- “Finding the 5-year historical data is a challenge.”

- “We’re not confident we can accurately retrieve older data elements.”

Accuracy of New Metrics (e.g., Test Scores, High School GPA, Family Income): Respondents questioned the consistency and quality of source data for new proposed metrics, especially self-reported fields.

- “Accuracy of high school GPA and test score data.”

- “Inconsistent reporting of income or first-generation status.”

- “Not all schools require standardized test scores anymore.”

Ambiguity in Definitions and Reporting Guidance: Respondents emphasized the lack of clear definitions for key terms and reporting instructions.

- “Lack of clear definitions or guidance on several metrics.”

- “We need clarity on what is expected - definitions are vague.”

- “Unclear how some elements should be calculated or attributed.”

Compressed Timeline and Resource Constraints: Respondents expressed concern about the short turnaround time for ACTS reporting, especially given staffing shortages.

- “The exceptionally short timeline would be burdensome given current staffing levels.”

- “Resources to gather the required data sets for all proposed components.”

- “We need more time and better infrastructure to implement this.”

Student Privacy and Small Cell Suppression: Respondents raised concerns about student-level privacy, especially for small programs and sensitive data.

- “Student privacy concerns, especially with small cohort sizes.”

- “Privacy risk for disaggregated data at smaller institutions.”

- “Concerns about identifying students through detailed demographic breakdowns.”

Retrieving Undergraduate and Graduate Data

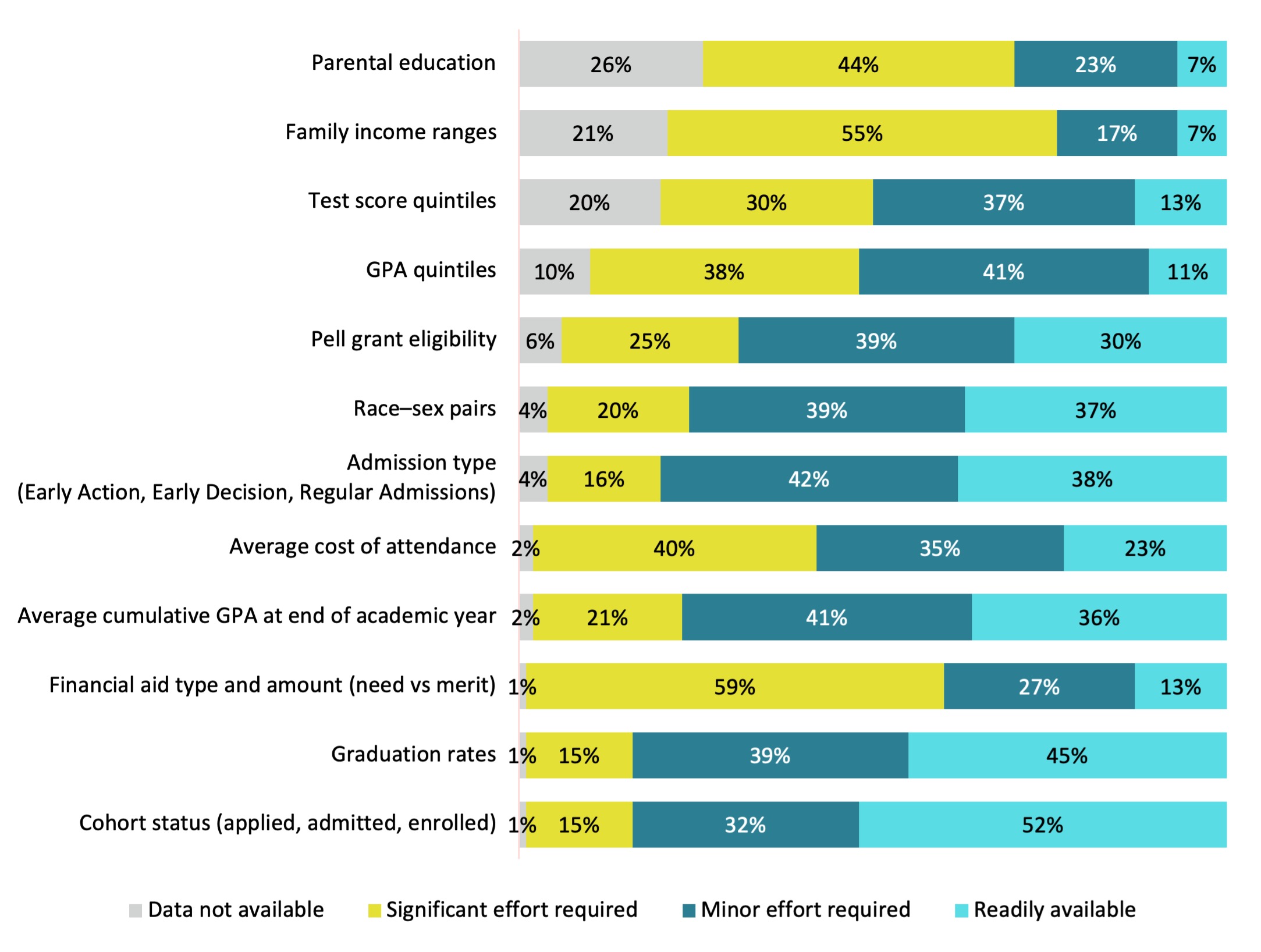

Respondents were asked to rate the level of effort required to extract several data elements relating to undergraduate students for the current and past five years (see Chart 2). The results highlight several data elements that present significant reporting challenges:

- Parental education: 70% of respondents indicated that this data is either unavailable or would require significant effort to retrieve.

- Family income ranges: 76% reported similar difficulty, citing unavailability or substantial effort needed.

- Test score quintiles: 50% indicated these data would also be difficult to obtain or reconstruct.

In contrast, several metrics appear to be readily accessible at many institutions:

- Cohort status: 84% noted this data is either immediately available or could be accessed with minimal effort.

- Graduation rates: Also cited by 84% as readily available or accessible with minor effort.

- Admission type: 80% of respondents indicated this data is already available or easy to extract.

These results illustrate a clear divide between data elements that align with existing IPEDS surveys and those that might require new reporting infrastructures and/or cross-unit coordination.
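The combined shares quoted for undergraduate data come from adding adjacent response categories in Chart 2. As a rough sketch of that arithmetic, the Python below uses the Chart 2 percentages from the Image Descriptions section; the dictionary layout and the helper name `combined_shares` are illustrative assumptions, not part of the survey.

```python
# Chart 2 percentages as listed in the Image Descriptions section, in the order:
# (data not available, significant effort, minor effort, readily available)
chart2 = {
    "Parental education": (26, 44, 23, 7),
    "Family income ranges": (21, 55, 17, 7),
    "Test score quintiles": (20, 30, 37, 13),
    "Admission type": (4, 16, 42, 38),
    "Graduation rates": (1, 15, 39, 45),
    "Cohort status": (1, 15, 32, 52),
}


def combined_shares(row):
    """Collapse four response categories into (difficult, accessible) shares."""
    not_available, significant, minor, readily = row
    return not_available + significant, minor + readily


summary = {name: combined_shares(row) for name, row in chart2.items()}

for name, (difficult, accessible) in summary.items():
    print(f"{name}: {difficult}% difficult, {accessible}% accessible")
```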

Chart 2. Effort Required to Retrieve Data for Undergraduate Students: Current and Past Five Years

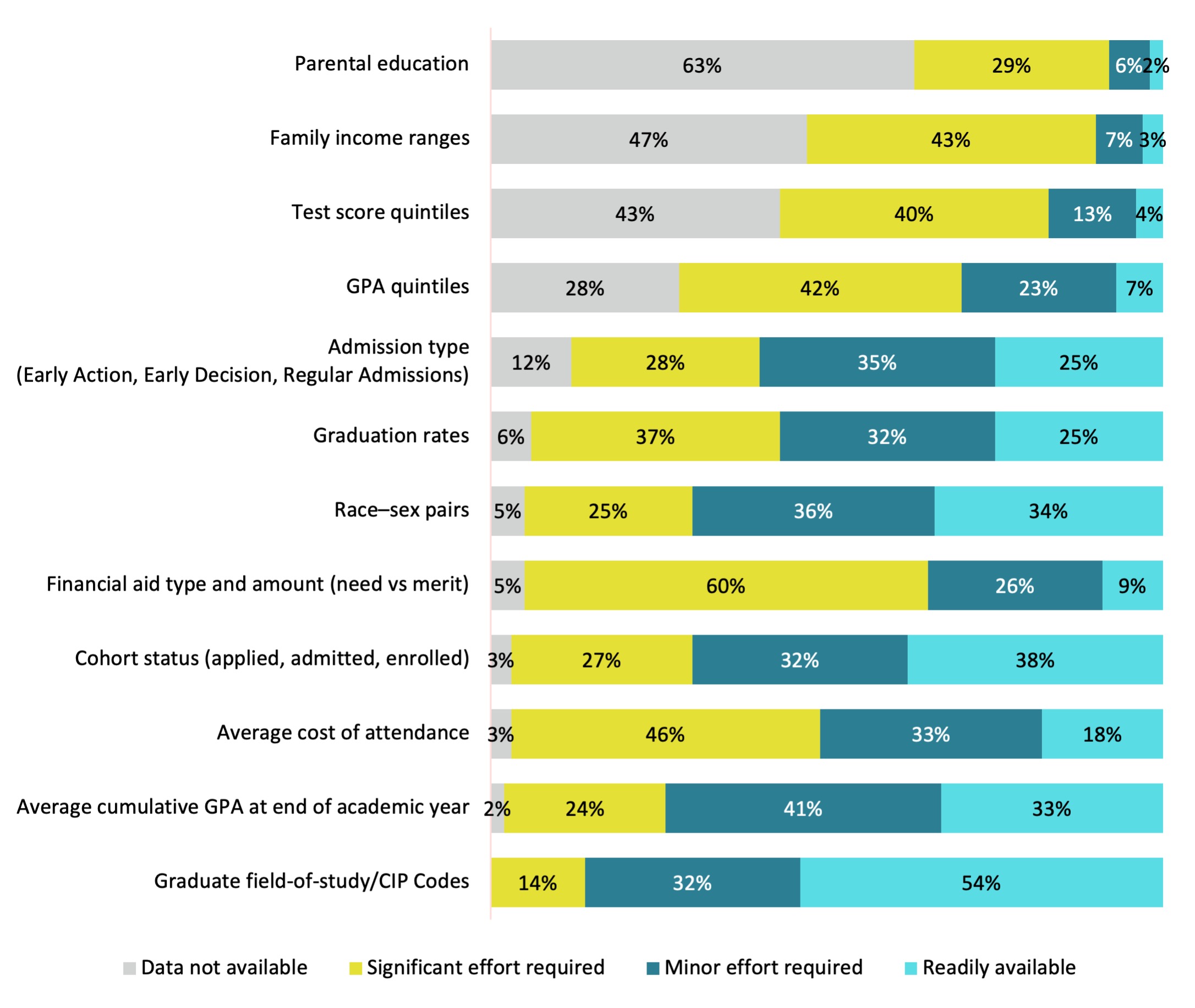

Respondents were also asked to assess the level of effort required to extract data elements related to graduate students (see Chart 3). Compared with the undergraduate results, a larger share of respondents reported that certain data elements were unavailable or difficult to retrieve, particularly Parental education, Family income ranges, and Test score quintiles.

In contrast, most institutions indicated that the following data elements could be reported with little to no effort: Cumulative GPA, Cohort status, and Race-sex pairs.

These results suggest that while some graduate student metrics align with existing data systems, others would require new data collection processes or significant system enhancements.

Chart 3. Effort Required to Retrieve Data for Graduate Students: Current and Past Five Years

Respondents were asked to identify the three metrics they found easiest and most challenging to retrieve. The results revealed clear patterns:

- Easiest: Race-Sex Pairs, GPA quintiles, Cohort status

- Most Challenging: Family income ranges, Parental Education, Test score quintiles

These findings highlight a divide between data already embedded in existing reporting structures and metrics that require new sources, cross-department coordination, or greater standardization.

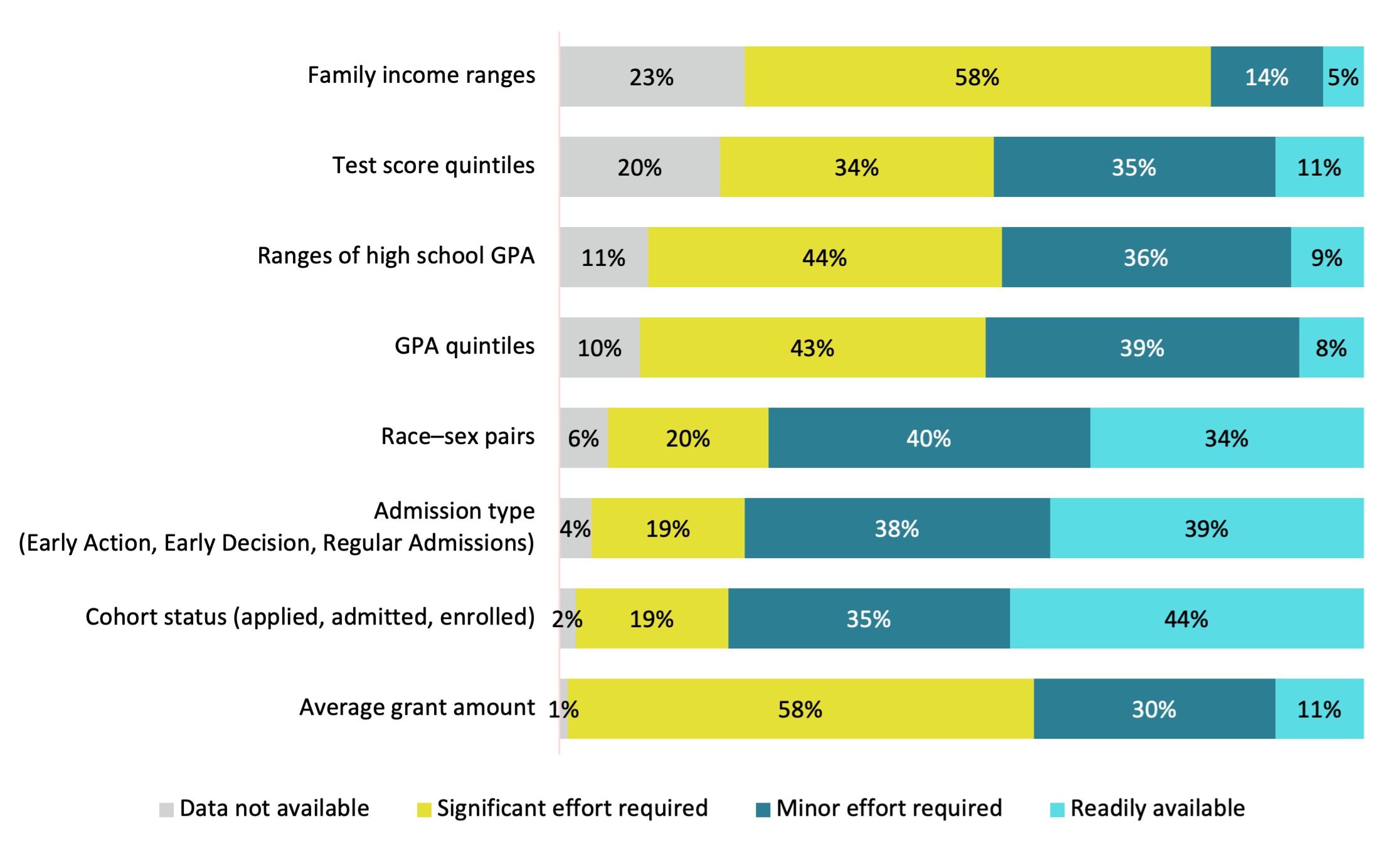

Disaggregating Undergraduate and Graduate Student Data

In addition to introducing new metrics, the proposed ACTS survey would require institutions to disaggregate data in ways not previously required by IPEDS. To assess the potential burden, respondents were asked to rate the level of difficulty their institution would face in producing these disaggregations.

Chart 4 shows how difficult respondents expect it to be to disaggregate undergraduate student data by these elements for the current and past five years. Most respondents reported that the data needed for these disaggregations were either not available or would take significant effort to produce for the following metrics: Family income ranges, Test score quintiles, Ranges of high school GPA, and GPA quintiles.

In contrast, some elements were considered far more manageable. More than 70% of respondents indicated that they could disaggregate data by cohort status, admission type, and race–sex pairs with little to no additional effort. These findings suggest that while some disaggregations align with existing practices, others may require substantial new effort, especially when applied retroactively across multiple years.

Chart 4. Difficulty Disaggregating Data by These Elements for Undergraduate Student Data: Current and Past Five Years

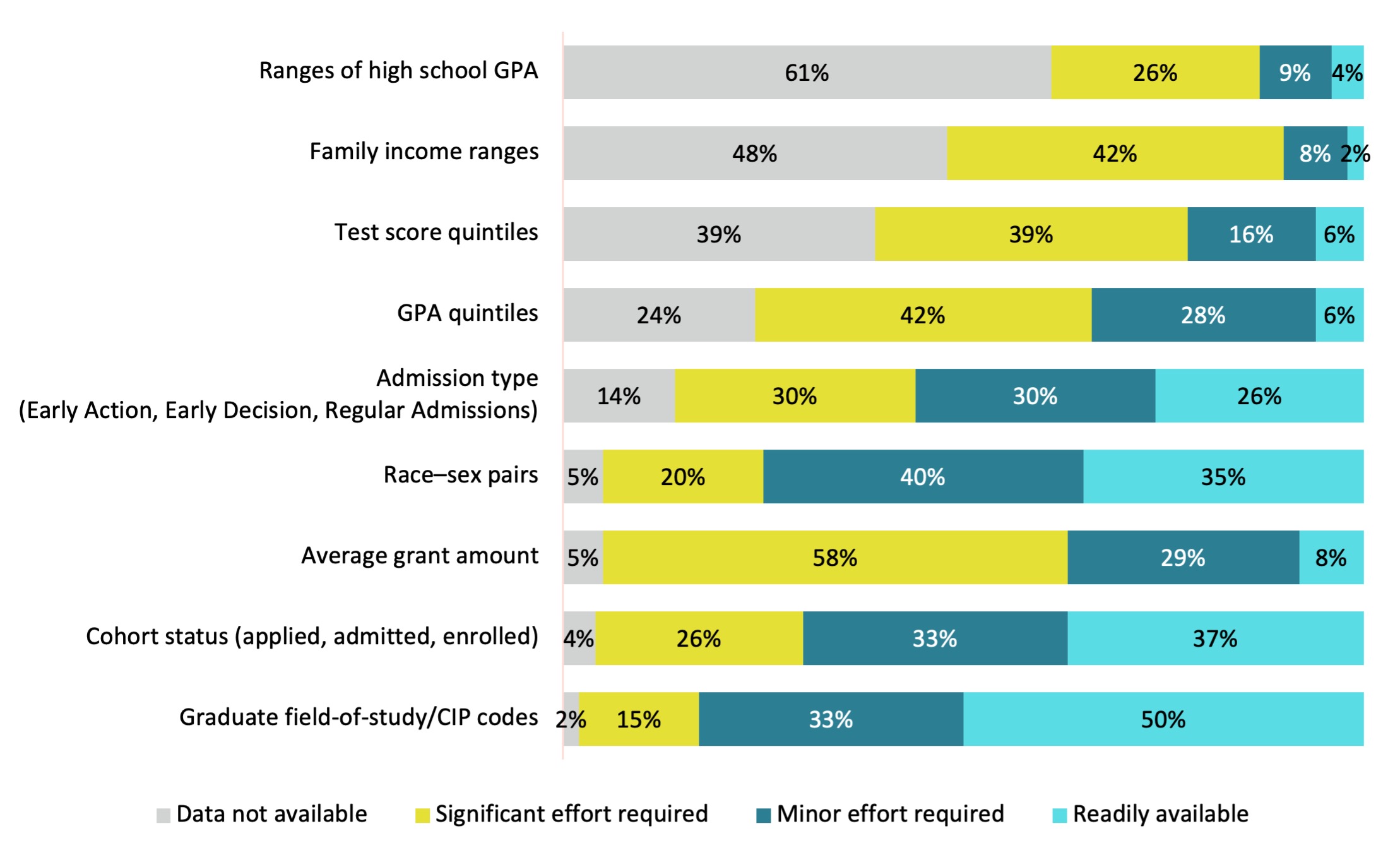

As shown in Chart 5, similar disaggregations will be required for graduate student data, with the addition of graduate field of study (CIP code) as a key element. Most respondents indicated that several metrics (high school GPA ranges, family income ranges, test score quintiles, GPA quintiles, and average grant amount) either do not exist in their current systems or would require significant effort to retrieve to support the required disaggregations.

In contrast, 70% or more of respondents reported that data on graduate field of study/CIP code, cohort status, and race–sex pairs are either readily available or could be accessed with only minor effort.

These results highlight the uneven landscape of graduate data availability, and the difficulty institutions may face in retrofitting disaggregations onto metrics not commonly tracked at the graduate level.
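One way to see how the graduate results differ from the undergraduate ones is to compare, element by element, the share of respondents who chose "Data not available" or "Significant effort required" in Charts 4 and 5. The sketch below does that with the percentages from the Image Descriptions section; the variable names and the percentage-point "gap" measure are illustrative choices rather than anything defined by the survey.

```python
# (data not available, significant effort required) percentages from Chart 4
undergrad = {
    "Family income ranges": (23, 58),
    "Test score quintiles": (20, 34),
    "Ranges of high school GPA": (11, 44),
    "GPA quintiles": (10, 43),
    "Race-sex pairs": (6, 20),
    "Admission type": (4, 19),
    "Cohort status": (2, 19),
    "Average grant amount": (1, 58),
}

# The same two categories from Chart 5 (graduate student data)
graduate = {
    "Ranges of high school GPA": (61, 26),
    "Family income ranges": (48, 42),
    "Test score quintiles": (39, 39),
    "GPA quintiles": (24, 42),
    "Admission type": (14, 30),
    "Race-sex pairs": (5, 20),
    "Average grant amount": (5, 58),
    "Cohort status": (4, 26),
}

# Gap in percentage points: graduate difficulty minus undergraduate difficulty
gaps = {
    name: sum(graduate[name]) - sum(undergrad[name])
    for name in undergrad
    if name in graduate
}

for name, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {gap:+d} percentage points")
```

Graduate field of study/CIP code is left out because it appears only in Chart 5 and has no undergraduate counterpart.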

Chart 5. Difficulty Disaggregating Data by These Elements for Graduate Student Data: Current and Past Five Years

Responsibility for ACTS Reporting

When asked which unit would be primarily responsible for completing the ACTS report, 93% of respondents identified the Institutional Research/Institutional Effectiveness (IR/IE) office. An additional 4% indicated the Enrollment Management or Admissions office would take the lead.

Respondents were also asked to identify which institutional units would be involved in the reporting process overall. As shown in Table 1, the results point to a highly collaborative effort: 90% cited IR/IE, 88% cited Student Financial Aid, and 87% cited Enrollment Management/Admissions as key contributors. These findings underscore that ACTS reporting is expected to be a cross-functional responsibility, requiring coordination across multiple offices and data systems.

| Unit | Aggregate |
|---|---|
| Institutional Research/Effectiveness | 90% |
| Student Financial Aid | 88% |
| Enrollment Management/Admissions | 87% |
| Registrar | 60% |
| IT | 38% |
| Bursar | 21% |
| Provost’s Office | 12% |
| President’s Office | 3% |

Note: This item was multiple response; column will not add to 100%.

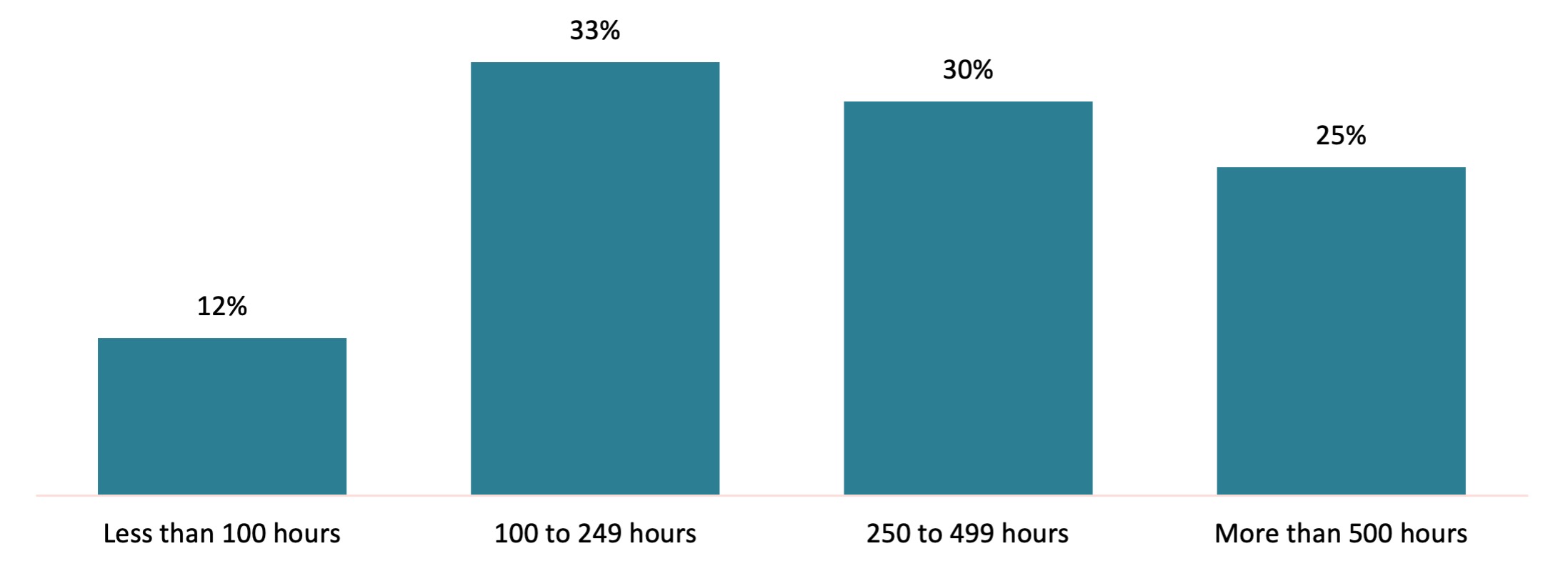

Next, respondents were asked to estimate the total amount of time it would take their institution to complete the ACTS survey (see Chart 6). The majority (55% of respondents) estimated the effort would require 250 or more hours, underscoring the significant resource demands respondents anticipate in preparing and submitting the required data.

Chart 6. Approximate Number of Hours to Complete ACTS Survey

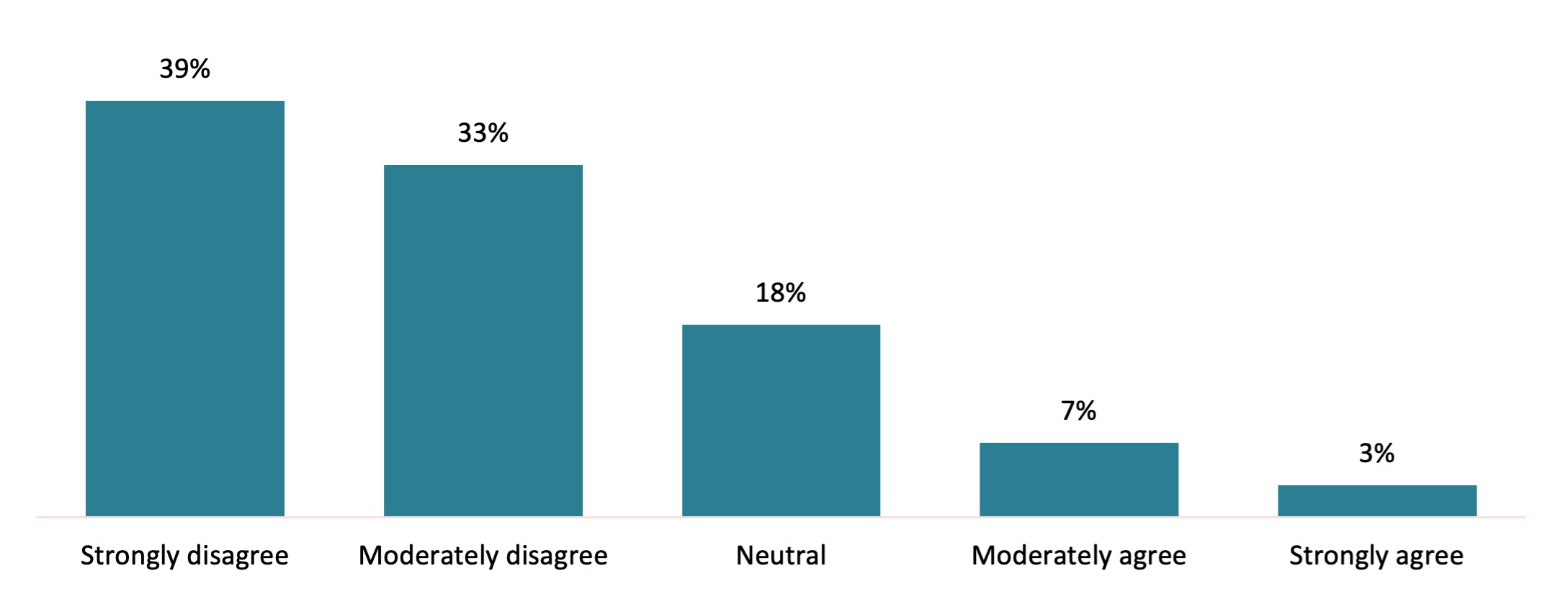

Although no official reporting deadline had been announced at the time of the survey, respondents were asked to assess their level of confidence in meeting any future ACTS deadline, given their institution’s current reporting capabilities and resources (see Chart 7).

The results reveal significant concern: 72% of respondents selected either strongly disagree or moderately disagree in response to the statement, “Given our current resources and support, I am confident our institution can meet any proposed deadline.” This lack of confidence highlights widespread uncertainty about institutional readiness and reinforces the need for realistic timelines, clear guidance, and adequate support.

Chart 7. Level of Confidence to Meet Any Reporting Deadline with Current Resources/Support

Final Comments

At the end of the survey, we asked several open-ended questions. The responses have been grouped into key themes, each illustrated with sample quotes from participants.

WHAT HURDLES WILL YOUR INSTITUTION FACE TO COMPLETE THE ACTS REPORTING?

Lack of Available or Collectable Data: Respondents noted that several required data elements for ACTS reporting are unavailable, inconsistently collected, or never previously tracked.

- “Originally collected data availability.”

- “We’re unsure how to extract some of the new data elements.”

- “Limited staff and data availability to complete new collections.”

Limited Staffing and Capacity in Small Offices: Respondents, especially those in small or single-person IR/IE offices, raised serious concerns about their capacity to take on additional federal reporting.

- “Small, private institutions of higher education may have limited capacity.”

- “The IR office is already overwhelmed with FVT and GE—ACTS is yet another burden.”

- “We’re a one-person office—adding another federal collection is not feasible.”

Fragmented or Inaccessible Admissions & Financial Aid Data: Difficulties accessing relevant admissions, enrollment, and financial aid data across multiple systems or offices.

- “Accessing graduate student admissions and aid data will be challenging.”

- “We don’t store years of data in an accessible way.”

- “Admissions and financial aid systems don’t talk to each other.”

Data Quality and Student-Level Input Challenges: Concerns around inconsistent, inaccurate, or missing student-level inputs like test scores, income, or FAFSA completion.

- “Students don’t always submit FAFSA—so income data is incomplete.”

- “High school GPA, test scores, and other inputs are not reliable.”

- “We don’t ask for some of this data at all.”

Unclear Definitions and Ambiguity in Reporting Requirements: Respondents cited unclear expectations, definitions, and guidance as one of the largest hurdles to getting started.

- “The largest hurdle is the lack of clarity in the definitions.”

- “We’re not sure what’s being asked—definitions are vague.”

- “It’s unclear how to classify some of the new elements.”

Timing, Staff Burden, and Competing Priorities: Concerns about unrealistic timelines, additional workload, and staff fatigue—especially on top of other reporting mandates like FVT and GE.

- “We do not have the staff or time to comply with another federal reporting requirement.”

- “The burden of reporting keeps increasing—without additional resources.”

- “Our biggest hurdle is time—we’re already overloaded.”

WHAT VALUE DO YOU SEE IN REPORTING SOME OR ALL OF THIS INFORMATION?

Benchmarking and National Comparisons: Respondents saw value in using ACTS data to compare institutions, improve decision-making, and support external benchmarking.

- “Benchmarking (if this information becomes publicly available).”

- “I would love to see routinely available national data for context.”

- “If this data was available at a national level, it could help us see where we stand.”

Increased Transparency for Students and Families: Some respondents saw value in providing prospective students and parents with clearer information to support enrollment decisions.

- “Could support better transparency for families.”

- “Increase access to admissions and outcomes data for prospective students.”

- “Help support more informed decisions by students.”

Potential to Support Student Equity and Understanding: A few respondents highlighted how this data could improve understanding of college access, equity, and admissions practices.

- “Better understanding of how colleges serve students.”

- “Helpful in tracking outcomes for underserved populations.”

- “Can shed light on institutional admissions patterns.”

WHICH RESOURCES WILL BE HELPFUL IN COMPLETING THE ACTS REPORTING?

Training, Webinars, and Technical Support: Requests for free, accessible IPEDS-specific training and technical guidance via webinars, help desks, and documentation.

- “Free IPEDS training and a fully staffed IPEDS help desk.”

- “Webinars with real examples of how to report the data.”

- “Technical documentation and support from IPEDS.”

Clear, Precise, and Concise Definitions: Strong emphasis on the need for a clear, shared understanding of definitions and instructions to ensure consistent reporting.

- “A detailed data dictionary clearly explaining each element.”

- “Concise and precise definitions for all terms.”

- “A one-page definition guide would be helpful.”

Enhanced IPEDS Help Desk and Documentation: Calls for better responsiveness and documentation from IPEDS to support ACTS-specific inquiries and case-by-case interpretation.

- “IPEDS help desk that’s responsive to ACTS-specific questions.”

- “Written documentation that matches the reporting system exactly.”

- “Better integration of new ACTS requirements into existing IPEDS support.”

Staffing Capacity and Infrastructure Support: Respondents, especially those from smaller institutions, requested help expanding internal capacity to meet data requirements.

- “Available staff to manage and track these new data requirements.”

- “Large universities may have more staff—smaller ones need support.”

- “We need infrastructure, not just instructions.”

More Time and Staff to Meet the Requirements: Respondents indicated that time extensions and additional personnel are critical for managing the complexity and workload of ACTS reporting.

- “Another year to implement.”

- “Postpone for another 2 years.”

- “Being able to hire an additional analyst to gather the data.”

Deadline Extensions and Phased Implementation: A broader theme of needing more lead time, especially for onboarding new data sources or accommodating school calendars.

- “A longer time (possibly next year’s data collection).”

- “Extended deadlines for schools with different term structures.”

- “We need a soft launch before full implementation.”

HOW CAN AIR BEST SUPPORT YOU IN THIS EFFORT, IF AT ALL?

National Advocacy and Policy Representation: Respondents want AIR to take a strong advocacy role—representing institutions to the Department of Education and pushing back on burdensome or unclear policy.

- “Advocacy to correct/redirect a misguided data collection effort.”

- “Display moral courage and galvanize the effort to kill ACTS.”

- “Advocate on behalf of institutions and small offices.”

Best Practices and Peer-Driven Guidance: Requests for AIR to gather and share practical implementation examples, templates, or business-process insights from peer institutions.

- “Best business practice workshop utilizing the reporting elements.”

- “Practical guidance on how others are preparing.”

- “Templates and shared examples would be extremely helpful.”

Clear, Consistent Training and Webinars: Respondents want AIR to provide timely, accessible, and comprehensive training on how to implement ACTS reporting.

- “Training, webinars, and walkthroughs that break down ACTS data elements.”

- “Provide guidance based on facts, not assumptions.”

- “Comprehensive tutorials are needed.”

Delay or Redesign of the ACTS Rollout: A strong contingent of respondents is requesting that AIR advocate for delaying or rethinking implementation timelines or survey scope.

- “Advocate for a delay in implementation.”

- “Lobby the Department of Education to push the deadline.”

- “Support a redesign of the survey before implementation.”

Direct Support for Institutional Offices: Suggestions that AIR offer direct assistance, such as help desks, templates, or tailored outreach, to individual reporting offices.

- “Provide reporting templates for smaller offices.”

- “Lots of support will be needed for IR teams.”

- “IPEDS-like training for ACTS would help.”

Clarity in Definitions and Technical Specifications: Many respondents are looking to AIR for clarification around vague or shifting definitions and technical reporting requirements.

- “Help finalize clear definitions and instructions.”

- “Timely updates on technical specifications.”

- “Advocate for more realistic data definitions.”

ASIDE FROM CANCELLING IT, WHAT SPECIFIC RECOMMENDATIONS WOULD YOU OFFER THE DEPARTMENT OF EDUCATION TO REDUCE THE BURDEN OF THIS COLLECTION ON INSTITUTIONS?

Eliminate Historical Data Requirements: Respondents urged DOE to limit ACTS to future data collections only, excluding retroactive or 5-year historical reporting.

- “Only collect data for future years and not historical years.”

- “Require only current data rather than 5 years of history.”

- “Don’t ask for data we’ve never previously tracked.”

Reduce or Align Data Elements with Existing IPEDS Collections: Calls to streamline ACTS by using existing data already reported through IPEDS or other federal sources.

- “Reduce the number of data items that are being requested.”

- “Remove the graduation rates and financial data—it’s already in IPEDS.”

- “Limit to what’s already collected through existing surveys.”

Reduce Reporting Frequency or Required Elements: Suggestions to lessen institutional burden by trimming scope, reporting cycles, or disaggregated categories.

- “Reduce the number of elements collected in any given year.”

- “Eliminate duplicative requirements.”

- “Allow fewer reporting groups to simplify.”

Extend Lead Time for Data Preparation: Institutions need more lead time to build new systems and reporting pipelines to meet ACTS requirements.

- “We need time to build out systems for new data.”

- “Give us more time to prepare the data sets.”

- “Don’t rush the implementation; data takes time to get right.”

Delay Implementation to 2026–27 or Later: A significant number of responses recommended delaying ACTS implementation to the 2026–27 cycle or beyond.

- “Delay implementation until 2026–27.”

- “Push this back one or two years.”

- “We need a soft launch year to get ready.”

Set a Clear Start Date and Remove Immediate Requirements: Respondents emphasized the importance of a clean, clear launch date so institutions can prepare and know what’s expected in advance.

- “Start with the 2026–27 year only—don’t backdate it.”

- “Set a clear beginning point and don’t confuse things with 2023 or 2024 retroactive data.”

- “Push the start to a future year with clear communication.”

ASIDE FROM CANCELLING IT, WHAT SPECIFIC RECOMMENDATIONS WOULD YOU OFFER THE DEPARTMENT OF EDUCATION TO INCREASE THE QUALITY OF THE DATA WITHIN THIS COLLECTION?

Convene Technical Panels and Peer Review: Respondents recommended DOE involve institutional experts (especially from smaller institutions) to vet elements, processes, and feasibility.

- “Convene a technical review panel including small institutions.”

- “Make sure the data process is technically reviewed before rollout.”

- “Include practitioners in early review and testing.”

Phase-in Approach and Multi-Year Lead Time: Suggestions to phase in requirements gradually and give institutions time to build systems and improve accuracy over time.

- “Phase in over time so that we can begin to collect data with better accuracy.”

- “Allow several years to work out data quality and submission processes.”

- “The data will be messy for a few years—acknowledge that.”

Clear Definitions, Instructions, and Training: Clear, consistent, and detailed definitions are essential to ensure institutions interpret requirements the same way.

- “Provide clear definitions and consistent instructions.”

- “Specific and standardized definitions to improve consistency.”

- “Align with existing IPEDS guidance and terminology.”

Improved Clarity and Consistency Over Time: The clarity of expectations, definitions, and timelines must improve over time to reduce confusion and increase data quality.

- “Better definitions and more complete guidance.”

- “Clearer populations and consistent data years.”

- “Allow time to improve quality while providing clear documentation.”

Standardize Elements and Institutional Interpretation: Institutions need standardized definitions and documentation, particularly for elements like GPA, test scores, and high school metrics.

- “Define GPA and high school info clearly.”

- “Standardize how institutions should report certain elements.”

- “Make sure we all understand what counts and how to report it.”

Reduce Overly Complex or Excessive Reporting: Reducing the number of required elements or disaggregations would help institutions report more accurately and focus on quality.

- “Reduce the number of required data elements.”

- “Limit excessive disaggregation that leads to poor quality data.”

- “Too many reporting elements will hurt data quality—not help it.”

Methodology

AIR emailed links to an online survey on August 22, 2025, to 1,459 members. Of those, 322 responded for a response rate of 22%. In addition, we provided access to a limited set of questions via the AIR website and gathered an additional 261 responses. The survey closed on September 9, 2025.
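The response rate above can be reproduced from the reported counts. This short Python sketch uses only the figures in the paragraph; the variable names are illustrative, and summing the email and website responses assumes the two groups do not overlap, which the brief does not state.

```python
invited = 1459          # members who were emailed the survey link
email_responses = 322   # responses to the emailed invitation
web_responses = 261     # additional responses gathered via the AIR website

# The response rate is defined only for the emailed group, because the number
# of people who saw the website version is not reported.
response_rate = email_responses / invited

# Assumption: no respondent is counted in both groups.
total_responses = email_responses + web_responses

print(f"Email response rate: {response_rate:.1%}")
print(f"Total responses: {total_responses}")
```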

Suggested Citation

Jones, D., Keller, C., and Ross, L. E. (2025). AIR Survey Results: Feedback to the Proposed Admissions and Consumer Transparency Supplement (ACTS). Brief. Association for Institutional Research. http://www.airweb.org/community-surveys.

About

AIR conducts community surveys on a variety of topics to gather in-the-moment understanding from data professionals working in higher education.


Image Descriptions

Chart 1

| Concern | Extremely/Very Concerned | Moderately Concerned | Slightly/Not at all Concerned |
|---|---|---|---|
| Proposed timeline for gathering and reporting the data (2025-26 academic year) | 91% | 5% | 4% |
| Collection of 5 years of historical data | 88% | 7% | 5% |
| Insufficient resources/staff given the complexity of the proposed data elements. | 84% | 11% | 5% |
| Data definitions that are unclear or seem to vary from IPEDS surveys. | 83% | 11% | 6% |
| Lack of clear data definitions. | 83% | 11% | 6% |
| Challenges reporting unique data disaggregations not previously used in IPEDS. | 77% | 14% | 9% |
| Student privacy concerns due to multiple, granular disaggregations. | 73% | 16% | 11% |
| Lack of availability of data elements for graduate students. | 59% | 18% | 23% |
| Coordinating across multiple institutional departments and data systems to compile the data. | 59% | 21% | 20% |
| High rate of unreported race/sex data on admissions form since students opt out of reporting. | 54% | 23% | 23% |
| Lack of availability of data elements for undergraduate students | 50% | 25% | 25% |
| Navigating what student data can be shared between departments. | 50% | 22% | 28% |

Chart 2

| Type | Data not available | Significant effort required | Minor effort required | Readily available |
|---|---|---|---|---|
| Parental education | 26% | 44% | 23% | 7% |
| Family income ranges | 21% | 55% | 17% | 7% |
| Test score quintiles | 20% | 30% | 37% | 13% |
| GPA quintiles | 10% | 38% | 41% | 11% |
| Pell grant eligibility | 6% | 25% | 39% | 30% |
| Race–sex pairs | 4% | 20% | 39% | 37% |
| Admission type (Early Action, Early Decision, Regular Admissions) | 4% | 16% | 42% | 38% |
| Average cost of attendance | 2% | 40% | 35% | 23% |
| Average cumulative GPA at end of academic year | 2% | 21% | 41% | 36% |
| Financial aid type and amount (need vs merit) | 1% | 59% | 27% | 13% |
| Graduation rates | 1% | 15% | 39% | 45% |
| Cohort status (applied, admitted, enrolled) | 1% | 15% | 32% | 52% |

Chart 3

| Type | Data not available | Significant effort required | Minor effort required | Readily available |
|---|---|---|---|---|
| Parental education | 63% | 29% | 6% | 2% |
| Family income ranges | 47% | 43% | 7% | 3% |
| Test score quintiles | 43% | 40% | 13% | 4% |
| GPA quintiles | 28% | 42% | 23% | 7% |
| Admission type (Early Action, Early Decision, Regular Admissions) | 12% | 28% | 35% | 25% |
| Graduation rates | 6% | 37% | 32% | 25% |
| Race–sex pairs | 5% | 25% | 36% | 34% |
| Financial aid type and amount (need vs merit) | 5% | 60% | 26% | 9% |
| Cohort status (applied, admitted, enrolled) | 3% | 27% | 32% | 38% |
| Average cost of attendance | 3% | 46% | 33% | 18% |
| Average cumulative GPA at end of academic year | 2% | 24% | 41% | 33% |
| Graduate field-of-study/CIP Codes | 0% | 14% | 32% | 54% |

Chart 4

| Type | Data not available | Significant effort required | Minor effort required | Readily available |
|---|---|---|---|---|
| Family income ranges | 23% | 58% | 14% | 5% |
| Test score quintiles | 20% | 34% | 35% | 11% |
| Ranges of high school GPA | 11% | 44% | 36% | 9% |
| GPA quintiles | 10% | 43% | 39% | 8% |
| Race–sex pairs | 6% | 20% | 40% | 34% |
| Admission type (Early Action, Early Decision, Regular Admissions) | 4% | 19% | 38% | 39% |
| Cohort status (applied, admitted, enrolled) | 2% | 19% | 35% | 44% |
| Average grant amount | 1% | 58% | 30% | 11% |

Chart 5

| Type | Data not available | Significant effort required | Minor effort required | Readily available |
|---|---|---|---|---|
| Ranges of high school GPA | 61% | 26% | 9% | 4% |
| Family income ranges | 48% | 42% | 8% | 2% |
| Test score quintiles | 39% | 39% | 16% | 6% |
| GPA quintiles | 24% | 42% | 28% | 6% |
| Admission type (Early Action, Early Decision, Regular Admissions) | 14% | 30% | 30% | 26% |
| Race–sex pairs | 5% | 20% | 40% | 35% |
| Average grant amount | 5% | 58% | 29% | 8% |
| Cohort status (applied, admitted, enrolled) | 4% | 26% | 33% | 37% |
| Graduate field-of-study/CIP codes | 2% | 15% | 33% | 50% |

Chart 6

| Hours | Aggregate |
|---|---|
| Less than 100 hours | 12% |
| 100 to 249 hours | 33% |
| 250 to 499 hours | 30% |
| More than 500 hours | 25% |

Chart 7

| Level | Aggregate |
|---|---|
| Strongly disagree | 39% |
| Moderately disagree | 33% |
| Neutral | 18% |
| Moderately agree | 7% |
| Strongly agree | 3% |