Released February 2024

In November 2023, the Association for Institutional Research (AIR) surveyed members to explore the use of generative artificial intelligence (AI) in the institutional research/institutional effectiveness profession. We collected 469 responses from 2,245 members for a response rate of 21%.
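The response rate above follows directly from the two counts reported. A minimal sketch of the arithmetic, where the function name `response_rate` is an illustrative choice and not part of the survey's methodology:

```python
def response_rate(responses: int, invited: int) -> float:
    """Return the share of invited members who responded (0.0 to 1.0)."""
    if invited <= 0:
        raise ValueError("invited must be a positive count")
    return responses / invited


# Counts reported in the brief: 469 responses from 2,245 members.
rate = response_rate(469, 2245)
rate_percent = round(rate * 100)  # whole-number percentage, as reported
print(f"Response rate: {rate_percent}%")
```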

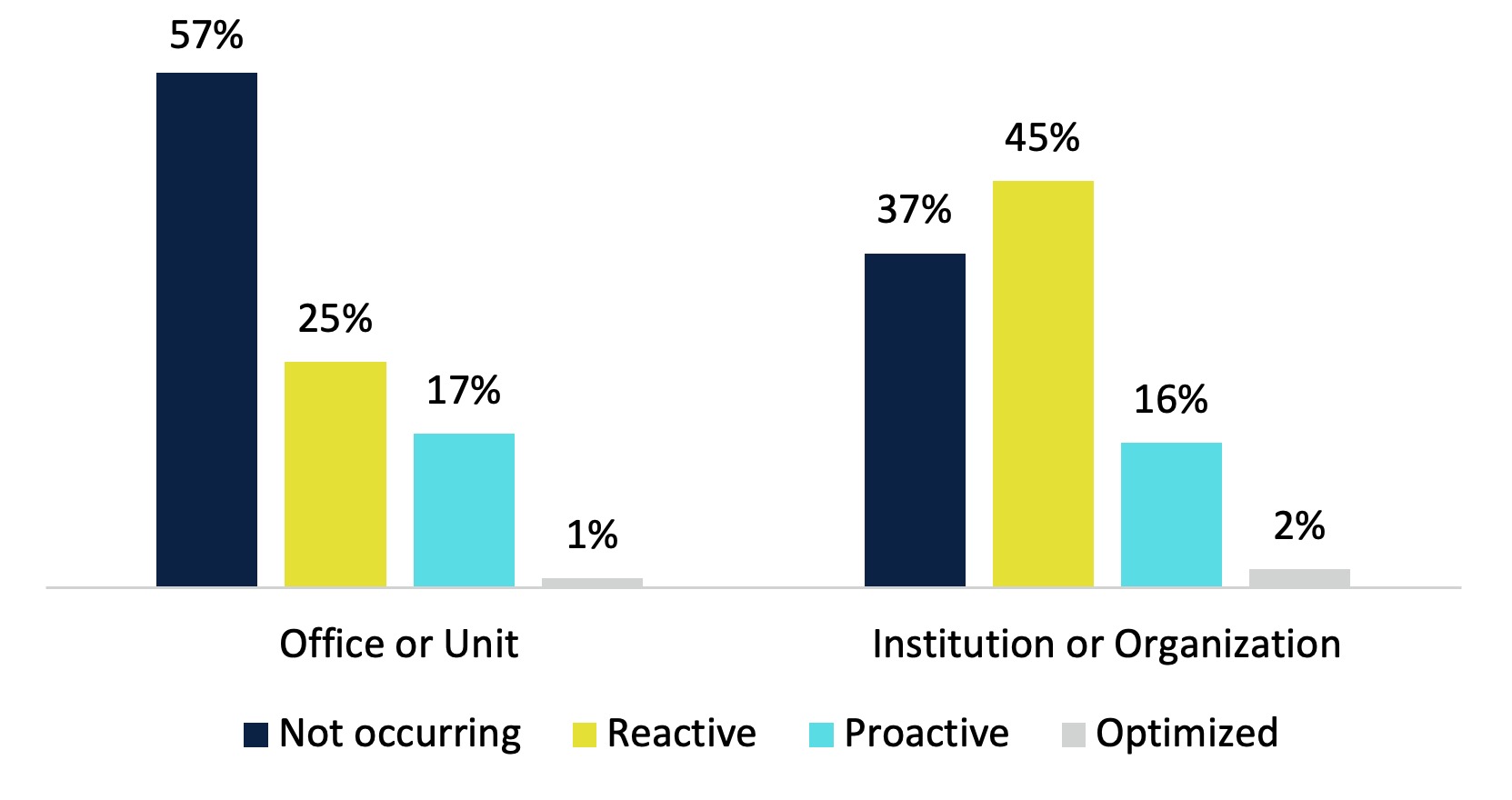

We asked respondents to estimate the generative AI maturity within their offices or units using a 4-point maturity scale. We found that most respondents (82%) rated their offices’ maturity as not occurring or reactive while 18% rated their offices’ maturity as proactive or optimized (Chart 1).

We also asked respondents to rate the generative AI maturity of their institutions. While the same percentage of respondents rated their institutions as either not occurring or reactive (82%), the distribution skewed higher; that is, a higher percentage of respondents rated the use of generative AI as reactive at the institution level (45%) compared to their IR/IE office (25%).

Chart 1. Degree of Generative AI Maturity

Section 1. Comparing IR/IE Offices Based on Generative AI Maturity

Based on the respondents’ ratings of their IR/IE offices’ generative AI maturity, we separated them into two groups: those whose offices have higher generative AI maturity (proactive or optimized) and those whose offices have lower maturity (not occurring or reactive).
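The grouping described above can be sketched as a simple collapse of the 4-point scale into two categories. The percentages are those listed for Chart 1 in the Image Description section; the names `collapse`, `OFFICE`, `INSTITUTION`, and `LOWER_LEVELS` are illustrative assumptions, not the authors' code:

```python
# Chart 1 percentages as reported in the brief.
OFFICE = {"Not occurring": 57, "Reactive": 25, "Proactive": 17, "Optimized": 1}
INSTITUTION = {"Not occurring": 37, "Reactive": 45, "Proactive": 16, "Optimized": 2}

# Levels the brief treats as "lower maturity"; the rest are "higher maturity".
LOWER_LEVELS = {"Not occurring", "Reactive"}


def collapse(distribution: dict) -> dict:
    """Sum a 4-level maturity distribution into lower/higher groups."""
    lower = sum(pct for level, pct in distribution.items() if level in LOWER_LEVELS)
    higher = sum(pct for level, pct in distribution.items() if level not in LOWER_LEVELS)
    return {"lower": lower, "higher": higher}


office_groups = collapse(OFFICE)
institution_groups = collapse(INSTITUTION)
print(office_groups, institution_groups)
```

Both distributions collapse to the same two-group split even though the underlying levels differ, which is why the brief notes that the institution-level ratings skew toward reactive.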

Next, we asked respondents four questions regarding the impact of generative AI on the IR/IE professional. Two of those questions were answered similarly between these two groups:

- Use of generative AI can simplify routine tasks/data processing.

- Use of generative AI can expand the capabilities of an office, such as exploring new techniques or data sources.

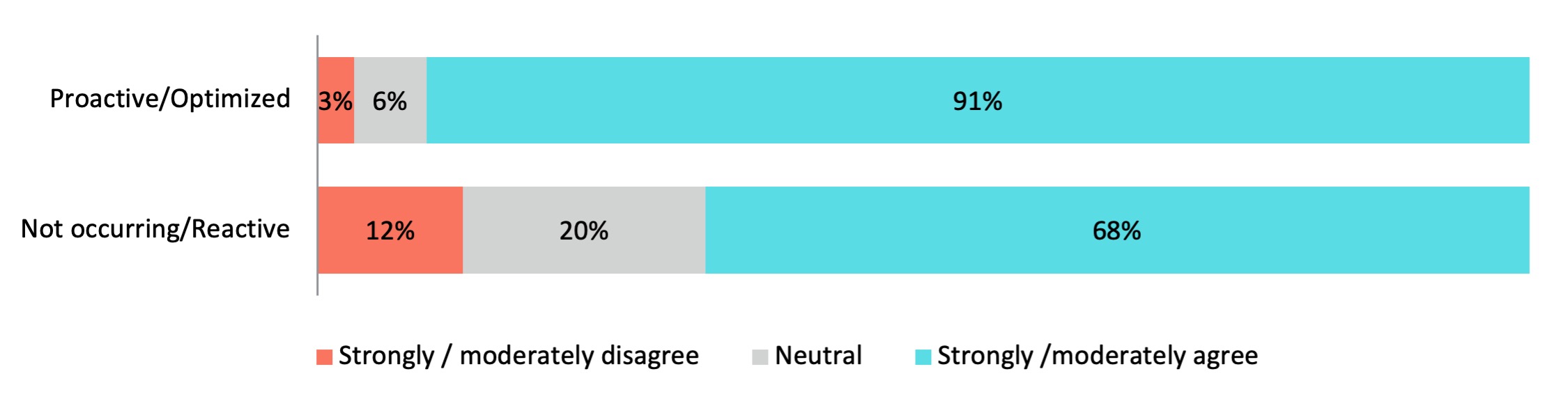

However, two questions were answered statistically differently (p < .05). Respondents from offices with higher generative AI maturity (proactive or optimized) were more likely to agree with the statement “Using generative AI can improve the efficiency of an office” than respondents from offices with lower maturity (not occurring or reactive; Chart 2).

Chart 2. Using Generative AI Can Improve the Efficiency of an Office

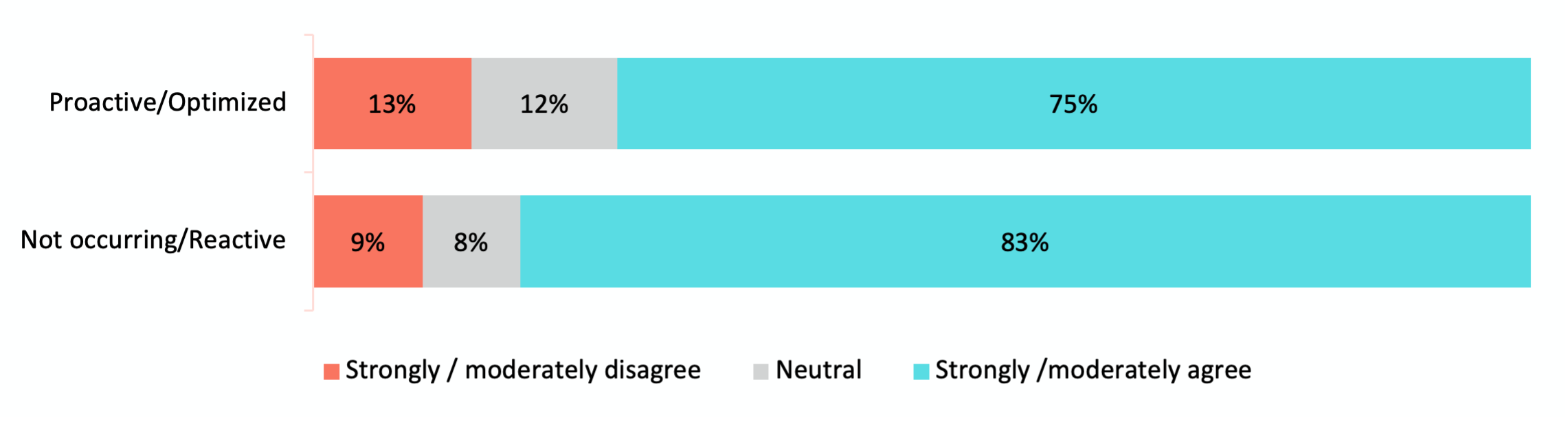

Respondents from offices with lower maturity (not occurring or reactive) were more likely to agree with the statement “Using generative AI will require significant professional development” than respondents from offices with higher maturity (proactive or optimized; Chart 3).

Chart 3. Using Generative AI Will Require Significant Professional Development
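The brief does not state which statistical test produced the p < .05 results. One common choice for comparing two groups across three agreement categories is a chi-square test of independence; the sketch below assumes that test purely for illustration. The counts are hypothetical (100 respondents per group, chosen only so they mirror the Chart 2 percentages); the actual group sizes are not reported, so the resulting statistic is not the survey's result.

```python
import math


def chi_square_2x3(table):
    """Chi-square test of independence for a 2x3 table of counts.

    Returns (statistic, p_value). A 2x3 table has (2-1)*(3-1) = 2 degrees
    of freedom, for which the chi-square upper-tail probability has the
    closed form exp(-statistic / 2).
    """
    if len(table) != 2 or any(len(row) != 3 for row in table):
        raise ValueError("expected a 2x3 table of counts")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic, math.exp(-statistic / 2)


# HYPOTHETICAL counts (disagree, neutral, agree), not the survey's data.
hypothetical_counts = [
    [12, 20, 68],  # lower maturity: not occurring / reactive
    [3, 6, 91],    # higher maturity: proactive / optimized
]
statistic, p_value = chi_square_2x3(hypothetical_counts)
print(f"chi-square = {statistic:.2f}, p = {p_value:.4f}")
```

With real data, the same function would take the observed counts for each group; the conclusion depends on the actual sample sizes, which is why the hypothetical result here should not be read as a replication.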

Section 2. IR/IE Offices with Lower Generative AI Maturity

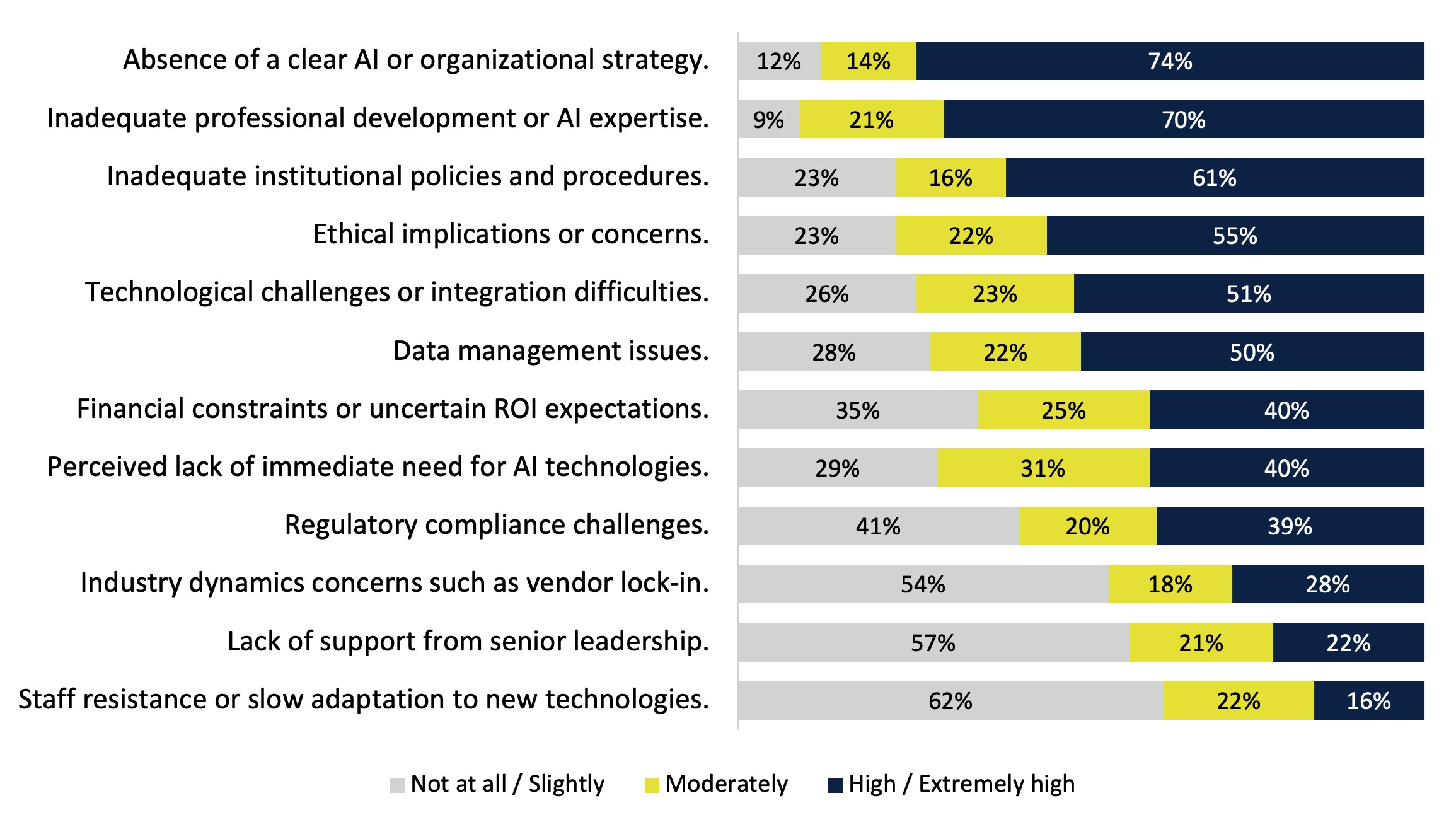

The absence of a clear AI or organizational strategy was the reason provided by the largest percentage of respondents (74%) from offices with lower generative AI maturity (not occurring or reactive) to explain the lack of use of generative AI. Inadequate professional development or AI expertise was the second most common reason for lack of AI use (Chart 4).

Chart 4. Degree that Items are Reasons for Lack of Generative AI Use

When asked what other reasons prevent their offices from using generative AI, several respondents mentioned a lack of time, being short-staffed, and a lack of understanding of how it could benefit their work.

Representative Comments

- Everyone is already doing more than should be expected, and this feels like 'one more thing'.

- Low staff capacity and competing demands. With one director and one analyst, responsibilities for federal, state, and other regulatory reporting, institutional planning, data governance, and accreditation leads to a very, very small amount of time for applying new technologies.

- Office has been understaffed with little time to dig into this. Our institution has convened a campus-wide committee to understand the impact of AI and how we might use it, so hoping to develop an office-specific plan over the next two years.

- We just need time to ensure generative AI doesn't negatively impact the quality of work or impact our stakeholders poorly. It can also be hard to pry into the black box of AI to ensure that what is generated is accurate and repeatable.

Staff resistance or slow adaptation to new technologies and lack of support from senior leadership were the least-reported reasons to explain lack of generative AI use in IR/IE offices.

Section 3. IR/IE Offices with Higher Generative AI Maturity

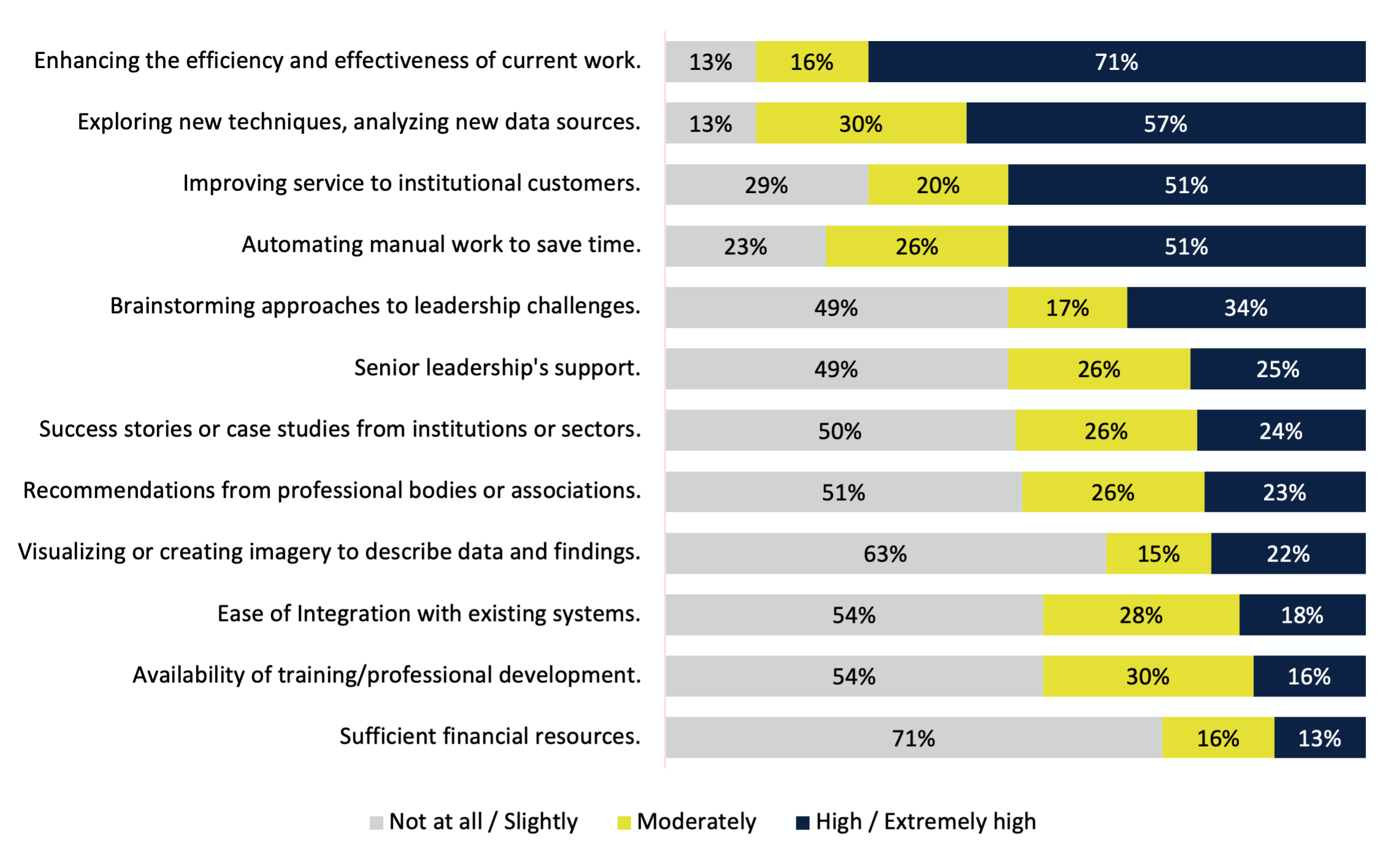

The role of AI in enhancing the efficiency and effectiveness of the office’s work was the reason provided by the largest percentage of respondents (71%) from offices with higher generative AI maturity (proactive or optimized) to explain its use. The ability to explore new techniques and/or analyze new data sources was the second most common reason for use (Chart 5).

Chart 5. Degree that Items are Reasons Why IR/IE Offices Use Generative AI

Of the respondents from offices with higher generative AI maturity, 109 identified 133 AI software solutions in use at their offices; ChatGPT accounted for the majority of those mentions (Table 1).

| Solution | % of Total |
|---|---|
| ChatGPT | 73% |
| Bing Chat | 5% |
| Claude | 5% |
| Bard | 5% |
| Canvs AI | 2% |
| Dall-E | 2% |
| Tableau GPT | 2% |
| AdAstra | 2% |
| Miscellaneous | 4% |

In addition, respondents identified 140 tasks performed with generative AI, from which five common themes emerge (Table 2).

| Task | % of Total |
|---|---|
| Development of content (e.g., code, emails, reports, summaries, templates) | 59% |
| Summarizing information (e.g., open-text questions, quantitative data, articles) | 20% |
| Researching information | 6% |
| Brainstorming/ideation | 6% |
| Image generation/graphic design | 4% |
| Miscellaneous | 5% |

We asked respondents to provide advice to individuals interested in adopting similar generative AI.

Representative Comments

- Try it, but don't let yourself get too distracted.

- Remember to not upload any identifiable institutional data including office names, institution names, staff or faculty names, etc. Unless you invest in a locked down enterprise LLM onsite (and you are willing to invest considerable time and energy to training the tool and validating the data) then be cautious of what promises this technology holds. Take the time to read, explore, and network with colleagues to be sure you (and your leadership) fully understand the implications of usage.

- Start now, be careful of using your institutional data due to security reasons.

- I would say to try things out. Just test it and see if it helps. If it doesn't, you haven't invested much time. If it does, you could save boatloads of time.

- Do not pretend that AI generated results are your own work.

- Be clear who owns the data you put in. Keep the AIR Statement of Ethics in mind when you consider using AI tools. Learn how to prompt [the] AI tool to 'train' it. Verify results with another tool. Apply good research techniques.

- Test AI's analytic ability on datasets (qualitative or quantitative) that you've already analyzed in-house so you can compare AI's performance to an IR analyst's performance.

- It is not going away. This is a tool that we should learn to use to improve what we do.

- BE CAREFUL and confer with cybersecurity professionals and legal and ensure that everyone understands what should and should not be put into these open source tools.

- Be cautiously optimistic. Lean into your expertise - you know what looks right. Align with ethical standards. Be willing to iterate. Maintain a prompt library of what works.

- Using it effectively requires that the user understands the subject matter at a very high level, because AI can generate inappropriate or misleading information

- Just start playing with it. At this time, generative AI is the new frontier, there's little to no documentation or classes to help make you proficient. Start small, asking it to search for things (Bing), then move up to help you write simple things like emails or presentation titles, then start testing the limits. Early adopters will become experts just through simple experience.

Section 4. Ethical Concerns Regarding the Use of Generative AI

We asked all respondents, regardless of their offices’ generative AI maturity, what ethical concerns (if any) they had regarding the use of generative AI. The comments provided span several themes.

Representative Comments

BIAS IN GENERATIVE MODELS

- Extreme ethical concerns exist around using generative AI/LLM trained on biased material that will then inject that bias downstream without appropriate citation or reflection. Generative AI can also be a stochastic parrot, spouting plausible and authoritative-sounding answers that end up being wrong when scrutinized. I usually enjoy being on the cutting edge of technology, but I cannot help but feel that similar to NFTs & ICOs/cryptocurrency, companies and institutions are racing toward AI under the fear of being left out, and not because it's the right thing to do.

- Since the algorithm is trained on information from the internet there are ethical considerations given social injustices entwined within the world wide web. In addition, we are just at the beginning of creating guidelines for plagiarism and appropriation.

- Bias in predictive models.

- Bias is a major concern when using these tools. Generative AI propagates ideals of the larger society which make these tools dangerous as they spread misinformation and inherent bias. Training in these tools becomes another task in addition to the professional development that is required to use them to increase efficiency in the office.

DATA SECURITY AND OWNERSHIP

- My biggest concern is where the data that is inputted goes. It is my understanding that anything entered into these systems becomes part of the system. That is unacceptable.

- The 'public' versions such as ChatGPT should not be used for any actual data analysis but just for brainstorming because the data becomes a part of the tools’ overall learning. Systems that say they have imbedded generative AI are even a little suspect because the tool still needs to be learned.

- It is tempting to use ChatGPT for analysis, but that means sending potentially sensitive data to the world. We are pursuing a product with cloud storage and high security standards.

- For using Bard, Chat GPT, or ATLAS.ti, campus data could be accessible by researchers outside of the institution, thus presenting some potential data privacy/ethical data use situations.

BLACK BOX ALGORITHMS (I.E., PREDICTIVE ALGORITHMS THAT ARE UNKNOWABLE OR UNTRACEABLE)

- Black box limits our ability to understand sources and methodology. That limits our ability to fully understand output/results and recommend actions.

- It would be tempting to use it for analysis of open-ended survey responses and other unstructured data, but I would really want to test the quality, which would be quite time consuming.

- I'm a bit worried about black box models and the sensitivity of how people will use the outcomes of models with students and interventions.

- I only worry when we are living in black box scenarios where we don't have any idea of what the underlying mechanism is to drive decisions.

SKEPTICISM OF COMPANIES’ MOTIVATIONS

- They say they are not using my data or storing it... they say.

- AI will put people out of work while funneling yet more money to the top, to the people who don't need any more money.

- Rampant copyright violation by AI tool creators, putting creative professionals out of work, bad product, inability to retain copyright/security of proprietary materials fed into AI

- Ownership of the final product generated using AI. AI getting credit for individuals’ work.

Suggested Citation

Jones, D. and Ross, L. E. (2024). The Use of Generative Artificial Intelligence in Institutional Research/Effectiveness. Brief. Association for Institutional Research. http://www.airweb.org/community-surveys.

About

AIR conducts community surveys on a variety of topics to gather in-the-moment understanding from data professionals working in higher education.

Image Description

Chart 1.

| Degree | Office or Unit | Institution or Organization |
|---|---|---|
| Not occurring | 57% | 37% |
| Reactive | 25% | 45% |
| Proactive | 17% | 16% |
| Optimized | 1% | 2% |

Chart 2

| Use | Strongly / moderately disagree | Neutral | Strongly / moderately agree |
|---|---|---|---|
| Not occurring/Reactive | 12% | 20% | 68% |
| Proactive/Optimized | 3% | 6% | 91% |

Chart 3

| Need | Strongly / moderately disagree | Neutral | Strongly / moderately agree |
|---|---|---|---|
| Not occurring/Reactive | 9% | 8% | 83% |
| Proactive/Optimized | 13% | 12% | 75% |

Chart 4

| Reason | Not at all / Slightly | Moderately | High / Extremely high |
|---|---|---|---|
| Absence of a clear AI or organizational strategy. | 12% | 14% | 74% |
| Inadequate professional development or AI expertise. | 9% | 21% | 70% |
| Inadequate institutional policies and procedures. | 23% | 16% | 61% |
| Ethical implications or concerns. | 23% | 22% | 55% |
| Technological challenges or integration difficulties. | 26% | 23% | 51% |
| Data management issues. | 28% | 22% | 50% |
| Financial constraints or uncertain ROI expectations. | 35% | 25% | 40% |
| Perceived lack of immediate need for AI technologies. | 29% | 31% | 40% |
| Regulatory compliance challenges. | 41% | 20% | 39% |
| Industry dynamics concerns such as vendor lock-in. | 54% | 18% | 28% |
| Lack of support from senior leadership. | 57% | 21% | 22% |
| Staff resistance or slow adaptation to new technologies. | 62% | 22% | 16% |

Chart 5

| Reason | Not at all / Slightly | Moderately | High / Extremely high |
|---|---|---|---|
| Enhancing the efficiency and effectiveness of current work. | 13% | 16% | 71% |
| Exploring new techniques, analyzing new data sources. | 13% | 30% | 57% |
| Automating manual work to save time. | 23% | 26% | 51% |
| Improving service to institutional customers. | 29% | 20% | 51% |
| Brainstorming approaches to leadership challenges. | 49% | 17% | 34% |
| Senior leadership's support. | 49% | 26% | 25% |
| Success stories or case studies from institutions or sectors. | 50% | 26% | 24% |
| Recommendations from professional bodies or associations. | 51% | 26% | 23% |
| Visualizing or creating imagery to describe data and findings. | 63% | 15% | 22% |
| Ease of Integration with existing systems. | 54% | 28% | 18% |
| Availability of training/professional development. | 54% | 30% | 16% |
| Sufficient financial resources. | 71% | 16% | 13% |